diff --git a/Makefile b/Makefile

index 03a048d..9ff6a24 100644

--- a/Makefile

+++ b/Makefile

@@ -11,7 +11,6 @@ debug:

@echo "GOPATH: $(shell go env GOPATH)"

@which go

@which gofumpt

- @which gci

@which golangci-lint

# Test

@@ -19,13 +18,11 @@ test:

go test ./...

tidy:

- go work sync

- #go mod tidy

+ go mod tidy

# Format code

fmt:

gofumpt -l -w .

- gci write .

# Run linter

lint: fmt

@@ -35,9 +32,9 @@ lint: fmt

lintfix: fmt

golangci-lint run --fix

-build:

+build: clean

mkdir -p ./dist

- CGO_ENABLED=0 go build -o ./dist/gemserve ./main.go

+ go build -mod=vendor -o ./dist/gemserve ./main.go

build-docker: build

docker build -t gemserve .

diff --git a/config/config.go b/config/config.go

index cdded94..628b10c 100644

--- a/config/config.go

+++ b/config/config.go

@@ -15,6 +15,8 @@ type Config struct {

RootPath string // Path to serve files from

DirIndexingEnabled bool // Allow client to browse directories or not

ListenAddr string // Address to listen on

+ TLSCert string // TLS certificate file

+ TLSKey string // TLS key file

}

var CONFIG Config //nolint:gochecknoglobals

@@ -43,6 +45,8 @@ func GetConfig() *Config {

rootPath := flag.String("root-path", "", "Path to serve files from")

dirIndexing := flag.Bool("dir-indexing", false, "Allow client to browse directories")

listen := flag.String("listen", "localhost:1965", "Address to listen on")

+ tlsCert := flag.String("tls-cert", "certs/server.crt", "TLS certificate file")

+ tlsKey := flag.String("tls-key", "certs/server.key", "TLS key file")

flag.Parse()

@@ -65,5 +69,7 @@ func GetConfig() *Config {

RootPath: *rootPath,

DirIndexingEnabled: *dirIndexing,

ListenAddr: *listen,

+ TLSCert: *tlsCert,

+ TLSKey: *tlsKey,

}

}

diff --git a/gemini/status_codes.go b/gemini/statusCodes.go

similarity index 100%

rename from gemini/status_codes.go

rename to gemini/statusCodes.go

diff --git a/gemini/url.go b/gemini/url.go

index b4bd15a..11c132d 100644

--- a/gemini/url.go

+++ b/gemini/url.go

@@ -8,7 +8,7 @@ import (

"strconv"

"strings"

- "git.antanst.com/antanst/xerrors"

+ "gemserve/lib/apperrors"

)

type URL struct {

@@ -28,7 +28,7 @@ func (u *URL) Scan(value interface{}) error {

}

b, ok := value.(string)

if !ok {

- return xerrors.NewError(fmt.Errorf("database scan error: expected string, got %T", value), 0, "Database scan error", true)

+ return apperrors.NewFatalError(fmt.Errorf("database scan error: expected string, got %T", value))

}

parsedURL, err := ParseURL(b, "", false)

if err != nil {

@@ -67,7 +67,7 @@ func ParseURL(input string, descr string, normalize bool) (*URL, error) {

} else {

u, err = url.Parse(input)

if err != nil {

- return nil, xerrors.NewError(fmt.Errorf("error parsing URL: %w: %s", err, input), 0, "URL parse error", false)

+ return nil, fmt.Errorf("error parsing URL: %w: %s", err, input)

}

}

@@ -80,7 +80,7 @@ func ParseURL(input string, descr string, normalize bool) (*URL, error) {

}

port, err := strconv.Atoi(strPort)

if err != nil {

- return nil, xerrors.NewError(fmt.Errorf("error parsing URL: %w: %s", err, input), 0, "URL parse error", false)

+ return nil, fmt.Errorf("error parsing URL: %w: %s", err, input)

}

full := fmt.Sprintf("%s://%s:%d%s", protocol, hostname, port, urlPath)

// full field should also contain query params and url fragments

@@ -126,13 +126,13 @@ func NormalizeURL(rawURL string) (*url.URL, error) {

// Parse the URL

u, err := url.Parse(rawURL)

if err != nil {

- return nil, xerrors.NewError(fmt.Errorf("error normalizing URL: %w: %s", err, rawURL), 0, "URL normalization error", false)

+ return nil, fmt.Errorf("error normalizing URL: %w: %s", err, rawURL)

}

if u.Scheme == "" {

- return nil, xerrors.NewError(fmt.Errorf("error normalizing URL: No scheme: %s", rawURL), 0, "Missing URL scheme", false)

+ return nil, fmt.Errorf("error normalizing URL: No scheme: %s", rawURL)

}

if u.Host == "" {

- return nil, xerrors.NewError(fmt.Errorf("error normalizing URL: No host: %s", rawURL), 0, "Missing URL host", false)

+ return nil, fmt.Errorf("error normalizing URL: No host: %s", rawURL)

}

// Convert scheme to lowercase

diff --git a/gemini/url_test.go b/gemini/url_test.go

index 8a13e60..ce570ca 100644

--- a/gemini/url_test.go

+++ b/gemini/url_test.go

@@ -11,7 +11,7 @@ func TestParseURL(t *testing.T) {

input := "gemini://caolan.uk/cgi-bin/weather.py/wxfcs/3162"

parsed, err := ParseURL(input, "", true)

value, _ := parsed.Value()

- if err != nil || !(value == "gemini://caolan.uk:1965/cgi-bin/weather.py/wxfcs/3162") {

+ if err != nil || (value != "gemini://caolan.uk:1965/cgi-bin/weather.py/wxfcs/3162") {

t.Errorf("fail: %s", parsed)

}

}

diff --git a/go.mod b/go.mod

index 2fc79ba..35fd2d6 100644

--- a/go.mod

+++ b/go.mod

@@ -2,20 +2,11 @@ module gemserve

go 1.24.3

-toolchain go1.24.4

-

require (

- git.antanst.com/antanst/logging v0.0.1

git.antanst.com/antanst/uid v0.0.1

- git.antanst.com/antanst/xerrors v0.0.1

- github.com/gabriel-vasile/mimetype v1.4.8

+ github.com/gabriel-vasile/mimetype v1.4.10

+ github.com/lmittmann/tint v1.1.2

github.com/matoous/go-nanoid/v2 v2.1.0

)

-require golang.org/x/net v0.33.0 // indirect

-

-replace git.antanst.com/antanst/xerrors => ../xerrors

-

replace git.antanst.com/antanst/uid => ../uid

-

-replace git.antanst.com/antanst/logging => ../logging

diff --git a/go.sum b/go.sum

index fe54b22..1e902e5 100644

--- a/go.sum

+++ b/go.sum

@@ -1,14 +1,14 @@

github.com/davecgh/go-spew v1.1.1 h1:vj9j/u1bqnvCEfJOwUhtlOARqs3+rkHYY13jYWTU97c=

github.com/davecgh/go-spew v1.1.1/go.mod h1:J7Y8YcW2NihsgmVo/mv3lAwl/skON4iLHjSsI+c5H38=

-github.com/gabriel-vasile/mimetype v1.4.8 h1:FfZ3gj38NjllZIeJAmMhr+qKL8Wu+nOoI3GqacKw1NM=

-github.com/gabriel-vasile/mimetype v1.4.8/go.mod h1:ByKUIKGjh1ODkGM1asKUbQZOLGrPjydw3hYPU2YU9t8=

+github.com/gabriel-vasile/mimetype v1.4.10 h1:zyueNbySn/z8mJZHLt6IPw0KoZsiQNszIpU+bX4+ZK0=

+github.com/gabriel-vasile/mimetype v1.4.10/go.mod h1:d+9Oxyo1wTzWdyVUPMmXFvp4F9tea18J8ufA774AB3s=

+github.com/lmittmann/tint v1.1.2 h1:2CQzrL6rslrsyjqLDwD11bZ5OpLBPU+g3G/r5LSfS8w=

+github.com/lmittmann/tint v1.1.2/go.mod h1:HIS3gSy7qNwGCj+5oRjAutErFBl4BzdQP6cJZ0NfMwE=

github.com/matoous/go-nanoid/v2 v2.1.0 h1:P64+dmq21hhWdtvZfEAofnvJULaRR1Yib0+PnU669bE=

github.com/matoous/go-nanoid/v2 v2.1.0/go.mod h1:KlbGNQ+FhrUNIHUxZdL63t7tl4LaPkZNpUULS8H4uVM=

github.com/pmezard/go-difflib v1.0.0 h1:4DBwDE0NGyQoBHbLQYPwSUPoCMWR5BEzIk/f1lZbAQM=

github.com/pmezard/go-difflib v1.0.0/go.mod h1:iKH77koFhYxTK1pcRnkKkqfTogsbg7gZNVY4sRDYZ/4=

github.com/stretchr/testify v1.9.0 h1:HtqpIVDClZ4nwg75+f6Lvsy/wHu+3BoSGCbBAcpTsTg=

github.com/stretchr/testify v1.9.0/go.mod h1:r2ic/lqez/lEtzL7wO/rwa5dbSLXVDPFyf8C91i36aY=

-golang.org/x/net v0.33.0 h1:74SYHlV8BIgHIFC/LrYkOGIwL19eTYXQ5wc6TBuO36I=

-golang.org/x/net v0.33.0/go.mod h1:HXLR5J+9DxmrqMwG9qjGCxZ+zKXxBru04zlTvWlWuN4=

gopkg.in/yaml.v3 v3.0.1 h1:fxVm/GzAzEWqLHuvctI91KS9hhNmmWOoWu0XTYJS7CA=

gopkg.in/yaml.v3 v3.0.1/go.mod h1:K4uyk7z7BCEPqu6E+C64Yfv1cQ7kz7rIZviUmN+EgEM=

diff --git a/lib/apperrors/errors.go b/lib/apperrors/errors.go

new file mode 100644

index 0000000..f3992a8

--- /dev/null

+++ b/lib/apperrors/errors.go

@@ -0,0 +1,94 @@

+package apperrors

+

+import "errors"

+

+type ErrorType int

+

+const (

+ ErrorOther ErrorType = iota

+ ErrorNetwork

+ ErrorGemini

+)

+

+type AppError struct {

+ Type ErrorType

+ StatusCode int

+ Fatal bool

+ Err error

+}

+

+func (e *AppError) Error() string {

+ if e == nil || e.Err == nil {

+ return ""

+ }

+ return e.Err.Error()

+}

+

+func (e *AppError) Unwrap() error {

+ if e == nil {

+ return nil

+ }

+ return e.Err

+}

+

+func NewError(err error) error {

+ return &AppError{

+ Type: ErrorOther,

+ StatusCode: 0,

+ Fatal: false,

+ Err: err,

+ }

+}

+

+func NewFatalError(err error) error {

+ return &AppError{

+ Type: ErrorOther,

+ StatusCode: 0,

+ Fatal: true,

+ Err: err,

+ }

+}

+

+func NewNetworkError(err error) error {

+ return &AppError{

+ Type: ErrorNetwork,

+ StatusCode: 0,

+ Fatal: false,

+ Err: err,

+ }

+}

+

+func NewGeminiError(err error, statusCode int) error {

+ return &AppError{

+ Type: ErrorGemini,

+ StatusCode: statusCode,

+ Fatal: false,

+ Err: err,

+ }

+}

+

+func GetStatusCode(err error) int {

+ var appError *AppError

+ if errors.As(err, &appError) && appError != nil {

+ return appError.StatusCode

+ }

+ return 0

+}

+

+func IsGeminiError(err error) bool {

+ var appError *AppError

+ if errors.As(err, &appError) && appError != nil {

+ if appError.Type == ErrorGemini {

+ return true

+ }

+ }

+ return false

+}

+

+func IsFatal(err error) bool {

+ var appError *AppError

+ if errors.As(err, &appError) && appError != nil {

+ return appError.Fatal

+ }

+ return false

+}

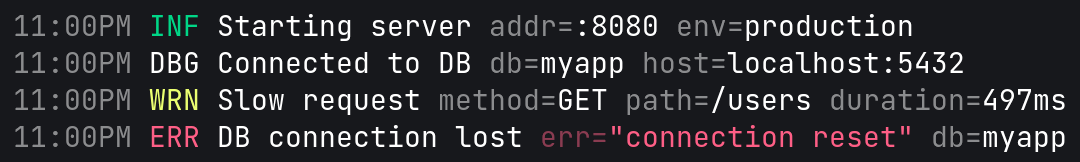

diff --git a/lib/logging/logging.go b/lib/logging/logging.go

new file mode 100644

index 0000000..66d8a36

--- /dev/null

+++ b/lib/logging/logging.go

@@ -0,0 +1,74 @@

+package logging

+

+import (

+ "context"

+ "log/slog"

+ "os"

+ "path/filepath"

+

+ "gemserve/config"

+

+ "github.com/lmittmann/tint"

+)

+

+type contextKey int

+

+const loggerKey contextKey = 0

+

+var (

+ programLevel *slog.LevelVar = new(slog.LevelVar) // Info by default

+ Logger *slog.Logger

+)

+

+func WithLogger(ctx context.Context, logger *slog.Logger) context.Context {

+ return context.WithValue(ctx, loggerKey, logger)

+}

+

+func FromContext(ctx context.Context) *slog.Logger {

+ if logger, ok := ctx.Value(loggerKey).(*slog.Logger); ok {

+ return logger

+ }

+ // Return default logger instead of panicking

+ return slog.Default()

+}

+

+func SetupLogging() {

+ programLevel.Set(config.CONFIG.LogLevel)

+ // With coloring (uses external package)

+ opts := &tint.Options{

+ AddSource: true,

+ Level: programLevel,

+ ReplaceAttr: func(groups []string, a slog.Attr) slog.Attr {

+ if a.Key == slog.SourceKey {

+ if source, ok := a.Value.Any().(*slog.Source); ok {

+ source.File = filepath.Base(source.File)

+ }

+ }

+ // Customize level colors:

+ // gray for debug, blue for info,

+ // yellow for warnings, black on red bg for errors

+ if a.Key == slog.LevelKey && len(groups) == 0 {

+ level, ok := a.Value.Any().(slog.Level)

+ if ok {

+ switch level {

+ case slog.LevelDebug:

+ // Use grayscale color (232-255 range) for gray/faint debug messages

+ return tint.Attr(240, a)

+ case slog.LevelInfo:

+ // Use color code 12 (bright blue) for info

+ return tint.Attr(12, a)

+ case slog.LevelWarn:

+ // Use color code 11 (bright yellow) for warnings

+ return tint.Attr(11, a)

+ case slog.LevelError:

+ // For black on red background, we need to modify the string value directly

+ // since tint.Attr() only supports foreground colors

+ return slog.String(a.Key, "\033[30;41m"+a.Value.String()+"\033[0m")

+ }

+ }

+ }

+ return a

+ },

+ }

+ Logger = slog.New(tint.NewHandler(os.Stdout, opts))

+}

diff --git a/main.go b/main.go

index 1832fbb..70f59a9 100644

--- a/main.go

+++ b/main.go

@@ -2,6 +2,7 @@ package main

import (

"bytes"

+ "context"

"crypto/tls"

"errors"

"fmt"

@@ -10,68 +11,87 @@ import (

"os"

"os/signal"

"strings"

+ "sync"

"syscall"

"time"

+ "gemserve/lib/apperrors"

+ "gemserve/lib/logging"

+

"gemserve/config"

"gemserve/gemini"

"gemserve/server"

- logging "git.antanst.com/antanst/logging"

- "git.antanst.com/antanst/uid"

- "git.antanst.com/antanst/xerrors"

-)

-// This channel is for handling fatal errors

-// from anywhere in the app. The consumer

-// should log and panic.

-var fatalErrors chan error

+ "git.antanst.com/antanst/uid"

+)

func main() {

config.CONFIG = *config.GetConfig()

-

- logging.InitSlogger(config.CONFIG.LogLevel)

-

- err := runApp()

+ logging.SetupLogging()

+ logger := logging.Logger

+ ctx := logging.WithLogger(context.Background(), logger)

+ err := runApp(ctx)

if err != nil {

+ logger.Error(fmt.Sprintf("Fatal Error: %v", err))

panic(fmt.Sprintf("Fatal Error: %v", err))

}

os.Exit(0)

}

-func runApp() error {

- logging.LogInfo("Starting up. Press Ctrl+C to exit")

+func runApp(ctx context.Context) error {

+ logger := logging.FromContext(ctx)

+ logger.Info("Starting up. Press Ctrl+C to exit")

listenAddr := config.CONFIG.ListenAddr

signals := make(chan os.Signal, 1)

signal.Notify(signals, syscall.SIGINT, syscall.SIGTERM)

- fatalErrors = make(chan error)

+ fatalErrors := make(chan error)

+ // Root server context, used to cancel

+ // connections and trigger graceful shutdown.

+ serverCtx, cancel := context.WithCancel(ctx)

+ defer cancel()

+

+ // WaitGroup to track active connections

+ var wg sync.WaitGroup

+

+ // Spawn server in the background.

+ // Returned errors are considered fatal.

go func() {

- err := startServer(listenAddr)

+ err := startServer(serverCtx, listenAddr, &wg, fatalErrors)

if err != nil {

- fatalErrors <- xerrors.NewError(err, 0, "Server startup failed", true)

+ fatalErrors <- apperrors.NewFatalError(fmt.Errorf("server startup failed: %w", err))

}

}()

for {

select {

case <-signals:

- logging.LogWarn("Received SIGINT or SIGTERM signal, exiting")

+ logger.Warn("Received SIGINT or SIGTERM signal, shutting down gracefully")

+ cancel()

+ wg.Wait()

return nil

case fatalError := <-fatalErrors:

- return xerrors.NewError(fatalError, 0, "Server error", true)

+ cancel()

+ wg.Wait()

+ return fatalError

}

}

}

-func startServer(listenAddr string) (err error) {

- cert, err := tls.LoadX509KeyPair("certs/server.crt", "certs/server.key")

+func startServer(ctx context.Context, listenAddr string, wg *sync.WaitGroup, fatalErrors chan<- error) (err error) {

+ logger := logging.FromContext(ctx)

+

+ cert, err := tls.LoadX509KeyPair(config.CONFIG.TLSCert, config.CONFIG.TLSKey)

if err != nil {

- return xerrors.NewError(fmt.Errorf("failed to load certificate: %w", err), 0, "Certificate loading failed", true)

+ return apperrors.NewFatalError(fmt.Errorf("failed to load TLS certificate/key: %w", err))

}

+ logger.Debug("Using TLS cert", "path", config.CONFIG.TLSCert)

+ logger.Debug("Using TLS key", "path", config.CONFIG.TLSKey)

+

tlsConfig := &tls.Config{

Certificates: []tls.Certificate{cert},

MinVersion: tls.VersionTLS12,

@@ -79,39 +99,58 @@ func startServer(listenAddr string) (err error) {

listener, err := tls.Listen("tcp", listenAddr, tlsConfig)

if err != nil {

- return xerrors.NewError(fmt.Errorf("failed to create listener: %w", err), 0, "Listener creation failed", true)

+ return apperrors.NewFatalError(err)

}

+

defer func(listener net.Listener) {

- // If we've got an error closing the

- // listener, make sure we don't override

- // the original error (if not nil)

- errClose := listener.Close()

- if errClose != nil && err == nil {

- err = xerrors.NewError(err, 0, "Listener close failed", true)

- }

+ _ = listener.Close()

}(listener)

- logging.LogInfo("Server listening on %s", listenAddr)

+ // If context is cancelled, close listener

+ // to unblock Accept() inside main loop.

+ go func() {

+ <-ctx.Done()

+ _ = listener.Close()

+ }()

+

+ logger.Info("Server listening", "address", listenAddr)

for {

conn, err := listener.Accept()

if err != nil {

- logging.LogInfo("Failed to accept connection: %v", err)

+ if ctx.Err() != nil {

+ return nil

+ } // ctx cancellation

+ logger.Info("Failed to accept connection", "error", err)

continue

}

+ wg.Add(1)

go func() {

+ defer wg.Done()

+

+ // Type assert the connection to TLS connection

+ tlsConn, ok := conn.(*tls.Conn)

+ if !ok {

+ logger.Error("Connection is not a TLS connection")

+ _ = conn.Close()

+ return

+ }

+

remoteAddr := conn.RemoteAddr().String()

connId := uid.UID()

- err := handleConnection(conn.(*tls.Conn), connId, remoteAddr)

+

+ // Create a timeout context for this connection

+ connCtx, cancel := context.WithTimeout(ctx, time.Duration(config.CONFIG.ResponseTimeout)*time.Second)

+ defer cancel()

+

+ err := handleConnection(connCtx, tlsConn, connId, remoteAddr)

if err != nil {

- var asErr *xerrors.XError

- if errors.As(err, &asErr) && asErr.IsFatal {

- fatalErrors <- asErr

+ if apperrors.IsFatal(err) {

+ fatalErrors <- err

return

- } else {

- logging.LogWarn("%s %s Connection failed: %v", connId, remoteAddr, err)

}

+ logger.Info("Connection failed", "id", connId, "remoteAddr", remoteAddr, "error", err)

}

}()

}

@@ -120,19 +159,25 @@ func startServer(listenAddr string) (err error) {

func closeConnection(conn *tls.Conn) error {

err := conn.CloseWrite()

if err != nil {

- return xerrors.NewError(fmt.Errorf("failed to close TLS connection: %w", err), 50, "Connection close failed", false)

+ return apperrors.NewNetworkError(fmt.Errorf("failed to close TLS connection: %w", err))

}

err = conn.Close()

if err != nil {

- return xerrors.NewError(fmt.Errorf("failed to close connection: %w", err), 50, "Connection close failed", false)

+ return apperrors.NewNetworkError(fmt.Errorf("failed to close connection: %w", err))

}

return nil

}

-func handleConnection(conn *tls.Conn, connId string, remoteAddr string) (err error) {

+func handleConnection(ctx context.Context, conn *tls.Conn, connId string, remoteAddr string) (err error) {

+ logger := logging.FromContext(ctx)

start := time.Now()

var outputBytes []byte

+ // Set connection deadline based on context

+ if deadline, ok := ctx.Deadline(); ok {

+ _ = conn.SetDeadline(deadline)

+ }

+

defer func(conn *tls.Conn) {

end := time.Now()

tookMs := end.Sub(start).Milliseconds()

@@ -144,54 +189,82 @@ func handleConnection(conn *tls.Conn, connId string, remoteAddr string) (err err

if i := bytes.Index(outputBytes, []byte{'\r'}); i >= 0 {

responseHeader = string(outputBytes[:i])

}

- logging.LogInfo("%s %s response %s (%dms)", connId, remoteAddr, responseHeader, tookMs)

+ logger.Info("Response", "connId", connId, "remoteAddr", remoteAddr, "responseHeader", responseHeader, "ms", tookMs)

+ _ = closeConnection(conn)

+ return

+ }

+

+ // Handle context cancellation/timeout

+ if errors.Is(err, context.DeadlineExceeded) {

+ logger.Info("Connection timeout", "id", connId, "remoteAddr", remoteAddr, "ms", tookMs)

+ responseHeader = fmt.Sprintf("%d Request timeout", gemini.StatusCGIError)

+ _, _ = conn.Write([]byte(responseHeader + "\r\n"))

+ _ = closeConnection(conn)

+ return

+ }

+ if errors.Is(err, context.Canceled) {

+ logger.Info("Connection cancelled", "id", connId, "remoteAddr", remoteAddr, "ms", tookMs)

_ = closeConnection(conn)

return

}

var code int

var responseMsg string

- var xErr *xerrors.XError

- if errors.As(err, &xErr) {

- // On fatal errors, immediatelly return the error.

- if xErr.IsFatal {

- _ = closeConnection(conn)

- return

- }

- code = xErr.Code

- responseMsg = xErr.UserMsg

+ if apperrors.IsFatal(err) {

+ _ = closeConnection(conn)

+ return

+ }

+ if apperrors.IsGeminiError(err) {

+ code = apperrors.GetStatusCode(err)

+ responseMsg = "server error"

} else {

code = gemini.StatusPermanentFailure

responseMsg = "server error"

}

responseHeader = fmt.Sprintf("%d %s", code, responseMsg)

- _, _ = conn.Write([]byte(responseHeader))

+ _, _ = conn.Write([]byte(responseHeader + "\r\n"))

_ = closeConnection(conn)

}(conn)

+ // Check context before starting

+ if err := ctx.Err(); err != nil {

+ return err

+ }

+

// Gemini is supposed to have a 1kb limit

// on input requests.

buffer := make([]byte, 1025)

n, err := conn.Read(buffer)

if err != nil && err != io.EOF {

- return xerrors.NewError(fmt.Errorf("failed to read connection data: %w", err), 59, "Connection read failed", false)

+ return apperrors.NewGeminiError(fmt.Errorf("failed to read connection data: %w", err), gemini.StatusBadRequest)

}

if n == 0 {

- return xerrors.NewError(fmt.Errorf("client did not send data"), 59, "No data received", false)

+ return apperrors.NewGeminiError(fmt.Errorf("client did not send data"), gemini.StatusBadRequest)

}

if n > 1024 {

- return xerrors.NewError(fmt.Errorf("client request size %d > 1024 bytes", n), 59, "Request too large", false)

+ return apperrors.NewGeminiError(fmt.Errorf("client request size %d > 1024 bytes", n), gemini.StatusBadRequest)

+ }

+

+ // Check context after read

+ if err := ctx.Err(); err != nil {

+ return err

}

dataBytes := buffer[:n]

dataString := string(dataBytes)

- logging.LogInfo("%s %s request %s (%d bytes)", connId, remoteAddr, strings.TrimSpace(dataString), len(dataBytes))

- outputBytes, err = server.GenerateResponse(conn, connId, dataString)

+ logger.Info("Request", "id", connId, "remoteAddr", remoteAddr, "data", strings.TrimSpace(dataString), "size", len(dataBytes))

+ outputBytes, err = server.GenerateResponse(ctx, conn, connId, dataString)

if err != nil {

return err

}

+

+ // Check context before write

+ if err := ctx.Err(); err != nil {

+ return err

+ }

+

_, err = conn.Write(outputBytes)

if err != nil {

return err

diff --git a/server/server.go b/server/server.go

index 795ce45..0742982 100644

--- a/server/server.go

+++ b/server/server.go

@@ -1,10 +1,11 @@

package server

import (

+ "context"

"crypto/tls"

- "errors"

"fmt"

"net"

+ "net/url"

"os"

"path"

"path/filepath"

@@ -12,10 +13,12 @@ import (

"strings"

"unicode/utf8"

+ "gemserve/lib/apperrors"

+ "gemserve/lib/logging"

+

"gemserve/config"

"gemserve/gemini"

- "git.antanst.com/antanst/logging"

- "git.antanst.com/antanst/xerrors"

+

"github.com/gabriel-vasile/mimetype"

)

@@ -26,35 +29,36 @@ type ServerConfig interface {

func checkRequestURL(url *gemini.URL) error {

if !utf8.ValidString(url.String()) {

- return xerrors.NewError(fmt.Errorf("invalid URL"), gemini.StatusBadRequest, "Invalid URL", false)

+ return apperrors.NewGeminiError(fmt.Errorf("invalid URL"), gemini.StatusBadRequest)

}

if url.Protocol != "gemini" {

- return xerrors.NewError(fmt.Errorf("invalid URL"), gemini.StatusProxyRequestRefused, "URL Protocol not Gemini, proxying refused", false)

+ return apperrors.NewGeminiError(fmt.Errorf("invalid URL"), gemini.StatusProxyRequestRefused)

}

_, portStr, err := net.SplitHostPort(config.CONFIG.ListenAddr)

if err != nil {

- return xerrors.NewError(fmt.Errorf("failed to parse server listen address: %w", err), 0, "", true)

+ return apperrors.NewGeminiError(fmt.Errorf("failed to parse server listen address: %w", err), gemini.StatusBadRequest)

}

listenPort, err := strconv.Atoi(portStr)

if err != nil {

- return xerrors.NewError(fmt.Errorf("invalid server listen port: %w", err), 0, "", true)

+ return apperrors.NewGeminiError(fmt.Errorf("invalid server listen port: %w", err), gemini.StatusBadRequest)

}

if url.Port != listenPort {

- return xerrors.NewError(fmt.Errorf("failed to parse URL: %w", err), gemini.StatusProxyRequestRefused, "invalid request port, proxying refused", false)

+ return apperrors.NewGeminiError(fmt.Errorf("invalid request port %d, expected %d: proxying refused", url.Port, listenPort), gemini.StatusProxyRequestRefused)

}

return nil

}

-func GenerateResponse(conn *tls.Conn, connId string, input string) ([]byte, error) {

+func GenerateResponse(ctx context.Context, conn *tls.Conn, connId string, input string) ([]byte, error) {

+ logger := logging.FromContext(ctx)

trimmedInput := strings.TrimSpace(input)

// url will have a cleaned and normalized path after this

url, err := gemini.ParseURL(trimmedInput, "", true)

if err != nil {

- return nil, xerrors.NewError(fmt.Errorf("failed to parse URL: %w", err), gemini.StatusBadRequest, "Invalid URL", false)

+ return nil, apperrors.NewGeminiError(fmt.Errorf("failed to parse URL: %w", err), gemini.StatusBadRequest)

}

- logging.LogDebug("%s %s normalized URL path: %s", connId, conn.RemoteAddr(), url.Path)

+ logger.Debug("normalized URL path", "id", connId, "remoteAddr", conn.RemoteAddr(), "path", url.Path)

err = checkRequestURL(url)

if err != nil {

@@ -64,31 +68,27 @@ func GenerateResponse(conn *tls.Conn, connId string, input string) ([]byte, erro

serverRootPath := config.CONFIG.RootPath

localPath, err := calculateLocalPath(url.Path, serverRootPath)

if err != nil {

- return nil, xerrors.NewError(err, gemini.StatusBadRequest, "Invalid path", false)

+ return nil, apperrors.NewGeminiError(err, gemini.StatusBadRequest)

}

- logging.LogDebug("%s %s request file path: %s", connId, conn.RemoteAddr(), localPath)

+ logger.Debug("request path", "id", connId, "remoteAddr", conn.RemoteAddr(), "localPath", localPath)

// Get file/directory information

info, err := os.Stat(localPath)

- if errors.Is(err, os.ErrNotExist) || errors.Is(err, os.ErrPermission) {

- return nil, xerrors.NewError(fmt.Errorf("%s %s failed to access path: %w", connId, conn.RemoteAddr(), err), gemini.StatusNotFound, "Not found or access denied", false)

- } else if err != nil {

- return nil, xerrors.NewError(fmt.Errorf("%s %s failed to access path: %w", connId, conn.RemoteAddr(), err), gemini.StatusNotFound, "Path access failed", false)

+ if err != nil {

+ return nil, apperrors.NewGeminiError(fmt.Errorf("failed to access path: %w", err), gemini.StatusNotFound)

}

// Handle directory.

if info.IsDir() {

- return generateResponseDir(conn, connId, url, localPath)

+ return generateResponseDir(localPath)

}

- return generateResponseFile(conn, connId, url, localPath)

+ return generateResponseFile(localPath)

}

-func generateResponseFile(conn *tls.Conn, connId string, url *gemini.URL, localPath string) ([]byte, error) {

+func generateResponseFile(localPath string) ([]byte, error) {

data, err := os.ReadFile(localPath)

- if errors.Is(err, os.ErrNotExist) || errors.Is(err, os.ErrPermission) {

- return nil, xerrors.NewError(fmt.Errorf("%s %s failed to access path: %w", connId, conn.RemoteAddr(), err), gemini.StatusNotFound, "Path access failed", false)

- } else if err != nil {

- return nil, xerrors.NewError(fmt.Errorf("%s %s failed to read file: %w", connId, conn.RemoteAddr(), err), gemini.StatusNotFound, "Path access failed", false)

+ if err != nil {

+ return nil, apperrors.NewGeminiError(fmt.Errorf("failed to access path: %w", err), gemini.StatusNotFound)

}

var mimeType string

@@ -102,10 +102,10 @@ func generateResponseFile(conn *tls.Conn, connId string, url *gemini.URL, localP

return response, nil

}

-func generateResponseDir(conn *tls.Conn, connId string, url *gemini.URL, localPath string) (output []byte, err error) {

+func generateResponseDir(localPath string) (output []byte, err error) {

entries, err := os.ReadDir(localPath)

if err != nil {

- return nil, xerrors.NewError(fmt.Errorf("%s %s failed to read directory: %w", connId, conn.RemoteAddr(), err), gemini.StatusNotFound, "Directory access failed", false)

+ return nil, apperrors.NewGeminiError(fmt.Errorf("failed to access path: %w", err), gemini.StatusNotFound)

}

if config.CONFIG.DirIndexingEnabled {

@@ -113,27 +113,27 @@ func generateResponseDir(conn *tls.Conn, connId string, url *gemini.URL, localPa

contents = append(contents, "Directory index:\n\n")

contents = append(contents, "=> ../\n")

for _, entry := range entries {

+ // URL-encode entry names for safety

+ safeName := url.PathEscape(entry.Name())

if entry.IsDir() {

- contents = append(contents, fmt.Sprintf("=> %s/\n", entry.Name()))

+ contents = append(contents, fmt.Sprintf("=> %s/\n", safeName))

} else {

- contents = append(contents, fmt.Sprintf("=> %s\n", entry.Name()))

+ contents = append(contents, fmt.Sprintf("=> %s\n", safeName))

}

}

data := []byte(strings.Join(contents, ""))

headerBytes := []byte(fmt.Sprintf("%d text/gemini; lang=en\r\n", gemini.StatusSuccess))

response := append(headerBytes, data...)

return response, nil

- } else {

- filePath := path.Join(localPath, "index.gmi")

- return generateResponseFile(conn, connId, url, filePath)

-

}

+ filePath := filepath.Join(localPath, "index.gmi")

+ return generateResponseFile(filePath)

}

func calculateLocalPath(input string, basePath string) (string, error) {

// Check for invalid characters early

if strings.ContainsAny(input, "\\") {

- return "", xerrors.NewError(fmt.Errorf("invalid characters in path: %s", input), gemini.StatusBadRequest, "Invalid path", false)

+ return "", apperrors.NewGeminiError(fmt.Errorf("invalid characters in path: %s", input), gemini.StatusBadRequest)

}

// If IsLocal(path) returns true, then Join(base, path)

@@ -149,7 +149,7 @@ func calculateLocalPath(input string, basePath string) (string, error) {

localPath, err := filepath.Localize(filePath)

if err != nil || !filepath.IsLocal(localPath) {

- return "", xerrors.NewError(fmt.Errorf("could not construct local path from %s: %s", input, err), gemini.StatusBadRequest, "Invalid path", false)

+ return "", apperrors.NewGeminiError(fmt.Errorf("could not construct local path from %s: %s", input, err), gemini.StatusBadRequest)

}

filePath = path.Join(basePath, localPath)
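Reviewer note: the directory index now passes entry names through `url.PathEscape` before emitting gemtext link lines. The sketch below shows the effect on a name containing a space and a question mark. The `linkLine` helper and the sample file names are hypothetical and only illustrate the loop body in `generateResponseDir`.

```go
package main

import (
	"fmt"
	"net/url"
)

// linkLine builds one gemtext link line for a directory entry,
// percent-encoding the name so spaces and reserved characters
// cannot break the link target.
func linkLine(name string, isDir bool) string {
	safeName := url.PathEscape(name)
	if isDir {
		return fmt.Sprintf("=> %s/\n", safeName)
	}
	return fmt.Sprintf("=> %s\n", safeName)
}

func main() {
	fmt.Print(linkLine("my notes.gmi", false)) // => my%20notes.gmi
	fmt.Print(linkLine("what?", true))         // => what%3F/
}
```

Without escaping, a space in the file name would end the link target early, and gemtext clients would treat the remainder as the link label.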

diff --git a/srv/index.gmi b/srv/index.gmi

index e69de29..66a1b33 100644

--- a/srv/index.gmi

+++ b/srv/index.gmi

@@ -0,0 +1,3 @@

+# Hello world!

+

+This is a test gemini file.

diff --git a/vendor/git.antanst.com/antanst/uid/README.md b/vendor/git.antanst.com/antanst/uid/README.md

new file mode 100644

index 0000000..d4f1653

--- /dev/null

+++ b/vendor/git.antanst.com/antanst/uid/README.md

@@ -0,0 +1,5 @@

+# UID

+

+This project generates a reasonably secure and convenient UID.

+

+Borrows code from https://github.com/matoous/go-nanoid

diff --git a/vendor/git.antanst.com/antanst/uid/uid.go b/vendor/git.antanst.com/antanst/uid/uid.go

new file mode 100644

index 0000000..9896e5e

--- /dev/null

+++ b/vendor/git.antanst.com/antanst/uid/uid.go

@@ -0,0 +1,68 @@

+package uid

+

+import (

+ "crypto/rand"

+ "errors"

+ "math"

+)

+

+// UID is a high level function that returns a reasonably secure UID.

+// Only alphanumeric characters except 'o','O' and 'l'

+func UID() string {

+ id, err := Generate("abcdefghijkmnpqrstuvwxyzABCDEFGHIJKLMNPQRSTUVWXYZ0123456789", 20)

+ if err != nil {

+ panic(err)

+ }

+ return id

+}

+

+// Generate is a low-level function to change alphabet and ID size.

+// Taken from go-nanoid project

+func Generate(alphabet string, size int) (string, error) {

+ chars := []rune(alphabet)

+

+ if len(alphabet) == 0 || len(alphabet) > 255 {

+ return "", errors.New("alphabet must not be empty and contain no more than 255 chars")

+ }

+ if size <= 0 {

+ return "", errors.New("size must be positive integer")

+ }

+

+ mask := getMask(len(chars))

+ // estimate how many random bytes we will need for the ID, we might actually need more but this is tradeoff

+ // between average case and worst case

+ ceilArg := 1.6 * float64(mask*size) / float64(len(alphabet))

+ step := int(math.Ceil(ceilArg))

+

+ id := make([]rune, size)

+ bytes := make([]byte, step)

+ for j := 0; ; {

+ _, err := rand.Read(bytes)

+ if err != nil {

+ return "", err

+ }

+ for i := 0; i < step; i++ {

+ currByte := bytes[i] & byte(mask)

+ if currByte < byte(len(chars)) {

+ id[j] = chars[currByte]

+ j++

+ if j == size {

+ return string(id[:size]), nil

+ }

+ }

+ }

+ }

+}

+

+// getMask generates bit mask used to obtain bits from the random bytes that are used to get index of random character

+// from the alphabet. Example: if the alphabet has 6 = (110)_2 characters it is sufficient to use mask 7 = (111)_2

+// Taken from go-nanoid project

+func getMask(alphabetSize int) int {

+ for i := 1; i <= 8; i++ {

+ mask := (2 << uint(i)) - 1

+ if mask >= alphabetSize-1 {

+ return mask

+ }

+ }

+ return 0

+}

diff --git a/vendor/github.com/gabriel-vasile/mimetype/.gitattributes b/vendor/github.com/gabriel-vasile/mimetype/.gitattributes

new file mode 100644

index 0000000..0cc26ec

--- /dev/null

+++ b/vendor/github.com/gabriel-vasile/mimetype/.gitattributes

@@ -0,0 +1 @@

+testdata/* linguist-vendored

diff --git a/vendor/github.com/gabriel-vasile/mimetype/.golangci.yml b/vendor/github.com/gabriel-vasile/mimetype/.golangci.yml

new file mode 100644

index 0000000..f2058cc

--- /dev/null

+++ b/vendor/github.com/gabriel-vasile/mimetype/.golangci.yml

@@ -0,0 +1,5 @@

+version: "2"

+linters:

+ exclusions:

+ presets:

+ - std-error-handling

diff --git a/vendor/github.com/gabriel-vasile/mimetype/LICENSE b/vendor/github.com/gabriel-vasile/mimetype/LICENSE

new file mode 100644

index 0000000..13b61da

--- /dev/null

+++ b/vendor/github.com/gabriel-vasile/mimetype/LICENSE

@@ -0,0 +1,21 @@

+MIT License

+

+Copyright (c) 2018 Gabriel Vasile

+

+Permission is hereby granted, free of charge, to any person obtaining a copy

+of this software and associated documentation files (the "Software"), to deal

+in the Software without restriction, including without limitation the rights

+to use, copy, modify, merge, publish, distribute, sublicense, and/or sell

+copies of the Software, and to permit persons to whom the Software is

+furnished to do so, subject to the following conditions:

+

+The above copyright notice and this permission notice shall be included in all

+copies or substantial portions of the Software.

+

+THE SOFTWARE IS PROVIDED "AS IS", WITHOUT WARRANTY OF ANY KIND, EXPRESS OR

+IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES OF MERCHANTABILITY,

+FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT. IN NO EVENT SHALL THE

+AUTHORS OR COPYRIGHT HOLDERS BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER

+LIABILITY, WHETHER IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING FROM,

+OUT OF OR IN CONNECTION WITH THE SOFTWARE OR THE USE OR OTHER DEALINGS IN THE

+SOFTWARE.

diff --git a/vendor/github.com/gabriel-vasile/mimetype/README.md b/vendor/github.com/gabriel-vasile/mimetype/README.md

new file mode 100644

index 0000000..f28f56c

--- /dev/null

+++ b/vendor/github.com/gabriel-vasile/mimetype/README.md

@@ -0,0 +1,103 @@

+# mimetype

+

+A package for detecting MIME types and extensions based on magic numbers.

+

+Goroutine safe, extensible, no C bindings.

+

+## Features

+- fast and precise MIME type and file extension detection

+- long list of [supported MIME types](supported_mimes.md)

+- possibility to [extend](https://pkg.go.dev/github.com/gabriel-vasile/mimetype#example-package-Extend) with other file formats

+- common file formats are prioritized

+- [text vs. binary files differentiation](https://pkg.go.dev/github.com/gabriel-vasile/mimetype#example-package-TextVsBinary)

+- no external dependencies

+- safe for concurrent usage

+

+## Install

+```bash

+go get github.com/gabriel-vasile/mimetype

+```

+

+## Usage

+```go

+mtype := mimetype.Detect([]byte)

+// OR

+mtype, err := mimetype.DetectReader(io.Reader)

+// OR

+mtype, err := mimetype.DetectFile("/path/to/file")

+fmt.Println(mtype.String(), mtype.Extension())

+```

+See the [runnable Go Playground examples](https://pkg.go.dev/github.com/gabriel-vasile/mimetype#pkg-overview).

+

+Caution: only use libraries like **mimetype** as a last resort. Content type detection

+using magic numbers is slow, inaccurate, and non-standard. Most of the time,

+protocols have methods for specifying such metadata; e.g., `Content-Type` header

+in HTTP and SMTP.

+

+## FAQ

+Q: My file is in the list of [supported MIME types](supported_mimes.md) but

+it is not correctly detected. What should I do?

+

+A: Some file formats (often Microsoft Office documents) keep their signatures

+towards the end of the file. Try increasing the number of bytes used for detection

+with:

+```go

+mimetype.SetLimit(1024*1024) // Set limit to 1MB.

+// or

+mimetype.SetLimit(0) // No limit, whole file content used.

+mimetype.DetectFile("file.doc")

+```

+If increasing the limit does not help, please

+[open an issue](https://github.com/gabriel-vasile/mimetype/issues/new?assignees=&labels=&template=mismatched-mime-type-detected.md&title=).

+

+## Tests

+In addition to unit tests,

+[mimetype_tests](https://github.com/gabriel-vasile/mimetype_tests) compares the

+library with the [Unix file utility](https://en.wikipedia.org/wiki/File_(command))

+for around 50 000 sample files. Check the latest comparison results

+[here](https://github.com/gabriel-vasile/mimetype_tests/actions).

+

+## Benchmarks

+Benchmarks for each file format are run when a PR is opened. The results can

+be seen on the [workflows page](https://github.com/gabriel-vasile/mimetype/actions/workflows/benchmark.yml).

+Performance improvements are welcome but correctness is prioritized.

+

+## Structure

+**mimetype** uses a hierarchical structure to keep the MIME type detection logic.

+This reduces the number of calls needed for detecting the file type. The reason

+behind this choice is that there are file formats used as containers for other

+file formats. For example, Microsoft Office files are just zip archives,

+containing specific metadata files. Once a file has been identified as a

+zip, there is no need to check if it is a text file, but it is worth checking if

+it is a Microsoft Office file.

+

+To prevent loading entire files into memory, when detecting from a

+[reader](https://pkg.go.dev/github.com/gabriel-vasile/mimetype#DetectReader)

+or from a [file](https://pkg.go.dev/github.com/gabriel-vasile/mimetype#DetectFile)

+**mimetype** limits itself to reading only the header of the input.
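To make "detection based on magic numbers" concrete, here is a minimal sketch that uses the standard library's `http.DetectContentType` instead of the vendored package. This is a deliberate substitution so the example has no external dependency; it is a much smaller sniffer than mimetype and is not how mimetype is implemented. The `sniff` helper name is an assumption made for this example.

```go
package main

import (
	"fmt"
	"net/http"
)

// sniff (hypothetical helper) reports the content type guessed from the
// leading bytes of data, using the standard library's sniffer rather than
// the mimetype package.
func sniff(data []byte) string {
	return http.DetectContentType(data)
}

func main() {
	// Both inputs contain only the format's signature bytes.
	fmt.Println(sniff([]byte("%PDF-1.7\n")))
	fmt.Println(sniff([]byte("\x89PNG\r\n\x1a\n")))
}
```

Only the first bytes of the input are inspected, which is the same reason the README suggests raising the limit for formats that keep their signatures near the end of the file.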

+

+

+

+

+

+

+

+

+

+Package `tint` implements a zero-dependency [`slog.Handler`](https://pkg.go.dev/log/slog#Handler)

+that writes tinted (colorized) logs. Its output format is inspired by the `zerolog.ConsoleWriter` and

+[`slog.TextHandler`](https://pkg.go.dev/log/slog#TextHandler).

+

+The output format can be customized using [`Options`](https://pkg.go.dev/github.com/lmittmann/tint#Options)

+which is a drop-in replacement for [`slog.HandlerOptions`](https://pkg.go.dev/log/slog#HandlerOptions).

+

+```

+go get github.com/lmittmann/tint

+```

+

+## Usage

+

+```go

+w := os.Stderr

+

+// Create a new logger

+logger := slog.New(tint.NewHandler(w, nil))

+

+// Set global logger with custom options

+slog.SetDefault(slog.New(

+ tint.NewHandler(w, &tint.Options{

+ Level: slog.LevelDebug,

+ TimeFormat: time.Kitchen,

+ }),

+))

+```

+

+### Customize Attributes

+

+`ReplaceAttr` can be used to alter or drop attributes. If set, it is called on

+each non-group attribute before it is logged. See [`slog.HandlerOptions`](https://pkg.go.dev/log/slog#HandlerOptions)

+for details.

+

+```go

+// Create a new logger with a custom TRACE level:

+const LevelTrace = slog.LevelDebug - 4

+

+w := os.Stderr

+logger := slog.New(tint.NewHandler(w, &tint.Options{

+ Level: LevelTrace,

+ ReplaceAttr: func(groups []string, a slog.Attr) slog.Attr {

+ if a.Key == slog.LevelKey && len(groups) == 0 {

+ level, ok := a.Value.Any().(slog.Level)

+ if ok && level <= LevelTrace {

+ return tint.Attr(13, slog.String(a.Key, "TRC"))

+ }

+ }

+ return a

+ },

+}))

+```

+

+```go

+// Create a new logger that doesn't write the time

+w := os.Stderr

+logger := slog.New(

+ tint.NewHandler(w, &tint.Options{

+ ReplaceAttr: func(groups []string, a slog.Attr) slog.Attr {

+ if a.Key == slog.TimeKey && len(groups) == 0 {

+ return slog.Attr{}

+ }

+ return a

+ },

+ }),

+)

+```

+

+```go

+// Create a new logger that writes all errors in red

+w := os.Stderr

+logger := slog.New(

+ tint.NewHandler(w, &tint.Options{

+ ReplaceAttr: func(groups []string, a slog.Attr) slog.Attr {

+ if a.Value.Kind() == slog.KindAny {

+ if _, ok := a.Value.Any().(error); ok {

+ return tint.Attr(9, a)

+ }

+ }

+ return a

+ },

+ }),

+)

+```

+

+### Automatically Enable Colors

+

+Colors are enabled by default. Use the `Options.NoColor` field to disable

+color output. To automatically enable colors based on terminal capabilities, use

+e.g., the [`go-isatty`](https://github.com/mattn/go-isatty) package:

+

+```go

+w := os.Stderr

+logger := slog.New(

+ tint.NewHandler(w, &tint.Options{

+ NoColor: !isatty.IsTerminal(w.Fd()),

+ }),

+)

+```

+

+### Windows Support

+

+Color support on Windows can be added by using e.g., the

+[`go-colorable`](https://github.com/mattn/go-colorable) package:

+

+```go

+w := os.Stderr

+logger := slog.New(

+ tint.NewHandler(colorable.NewColorable(w), nil),

+)

+```
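The `ReplaceAttr` hook shown in the README examples has the same signature as the one in `slog.HandlerOptions`, so its effect can be checked without a terminal. The sketch below uses the standard library's `slog.NewTextHandler` rather than `tint.NewHandler`, purely to stay dependency-free; `dropTime` and `render` are names assumed for this example.

```go
package main

import (
	"bytes"
	"fmt"
	"log/slog"
)

// dropTime is a ReplaceAttr function that removes the top-level time attribute.
func dropTime(groups []string, a slog.Attr) slog.Attr {
	if a.Key == slog.TimeKey && len(groups) == 0 {
		return slog.Attr{}
	}
	return a
}

// render logs one record through a stdlib TextHandler and returns the output.
func render() string {
	var buf bytes.Buffer
	h := slog.NewTextHandler(&buf, &slog.HandlerOptions{ReplaceAttr: dropTime})
	slog.New(h).Info("hello", "k", "v")
	return buf.String()
}

func main() {
	fmt.Print(render())
}
```

The same `dropTime` function could presumably be passed as `tint.Options.ReplaceAttr`, since the README describes `Options` as a drop-in replacement for `slog.HandlerOptions`.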

diff --git a/vendor/github.com/lmittmann/tint/buffer.go b/vendor/github.com/lmittmann/tint/buffer.go

new file mode 100644

index 0000000..93668fc

--- /dev/null

+++ b/vendor/github.com/lmittmann/tint/buffer.go

@@ -0,0 +1,40 @@

+package tint

+

+import "sync"

+

+type buffer []byte

+

+var bufPool = sync.Pool{

+ New: func() any {

+ b := make(buffer, 0, 1024)

+ return (*buffer)(&b)

+ },

+}

+

+func newBuffer() *buffer {

+ return bufPool.Get().(*buffer)

+}

+

+func (b *buffer) Free() {

+ // To reduce peak allocation, return only smaller buffers to the pool.

+ const maxBufferSize = 16 << 10

+ if cap(*b) <= maxBufferSize {

+ *b = (*b)[:0]

+ bufPool.Put(b)

+ }

+}

+

+func (b *buffer) Write(bytes []byte) (int, error) {

+ *b = append(*b, bytes...)

+ return len(bytes), nil

+}

+

+func (b *buffer) WriteByte(char byte) error {

+ *b = append(*b, char)

+ return nil

+}

+

+func (b *buffer) WriteString(str string) (int, error) {

+ *b = append(*b, str...)

+ return len(str), nil

+}
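The pooling pattern in `buffer.go` above can be exercised in isolation. The following is a rough sketch with renamed identifiers (`buf`, `pool`, `release`, and `maxPooledCap` are assumptions for this example, not names from tint); it shows that small buffers are reset and returned to the pool while oversized ones are left to the garbage collector.

```go
package main

import (
	"fmt"
	"sync"
)

type buf []byte

// Buffers that have grown beyond this capacity are not returned to the pool.
const maxPooledCap = 16 << 10

var pool = sync.Pool{
	New: func() any {
		b := make(buf, 0, 1024)
		return &b
	},
}

// release resets b and returns it to the pool unless it has grown too large.
// It reports whether the buffer was pooled.
func release(b *buf) bool {
	if cap(*b) > maxPooledCap {
		return false
	}
	*b = (*b)[:0]
	pool.Put(b)
	return true
}

func main() {
	b := pool.Get().(*buf)
	*b = append(*b, "short line"...)
	pooled := release(b)
	fmt.Println("small buffer pooled:", pooled)

	big := make(buf, 0, 32<<10)
	fmt.Println("large buffer pooled:", release(&big))
}
```

Storing a pointer to the slice in the pool, as the vendored code does, avoids allocating a new interface value on every `Put`.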

diff --git a/vendor/github.com/lmittmann/tint/handler.go b/vendor/github.com/lmittmann/tint/handler.go

new file mode 100644

index 0000000..46cf687

--- /dev/null

+++ b/vendor/github.com/lmittmann/tint/handler.go

@@ -0,0 +1,754 @@

+/*

+Package tint implements a zero-dependency [slog.Handler] that writes tinted

+(colorized) logs. The output format is inspired by the [zerolog.ConsoleWriter]

+and [slog.TextHandler].

+

+The output format can be customized using [Options], which is a drop-in

+replacement for [slog.HandlerOptions].

+

+# Customize Attributes

+

+Options.ReplaceAttr can be used to alter or drop attributes. If set, it is

+called on each non-group attribute before it is logged.

+See [slog.HandlerOptions] for details.

+

+Create a new logger with a custom TRACE level:

+

+ const LevelTrace = slog.LevelDebug - 4

+

+ w := os.Stderr

+ logger := slog.New(tint.NewHandler(w, &tint.Options{

+ Level: LevelTrace,

+ ReplaceAttr: func(groups []string, a slog.Attr) slog.Attr {

+ if a.Key == slog.LevelKey && len(groups) == 0 {

+ level, ok := a.Value.Any().(slog.Level)

+ if ok && level <= LevelTrace {

+ return tint.Attr(13, slog.String(a.Key, "TRC"))

+ }

+ }

+ return a

+ },

+ }))

+

+Create a new logger that doesn't write the time:

+

+ w := os.Stderr

+ logger := slog.New(

+ tint.NewHandler(w, &tint.Options{

+ ReplaceAttr: func(groups []string, a slog.Attr) slog.Attr {

+ if a.Key == slog.TimeKey && len(groups) == 0 {

+ return slog.Attr{}

+ }

+ return a

+ },

+ }),

+ )

+

+Create a new logger that writes all errors in red:

+

+ w := os.Stderr

+ logger := slog.New(

+ tint.NewHandler(w, &tint.Options{

+ ReplaceAttr: func(groups []string, a slog.Attr) slog.Attr {

+ if a.Value.Kind() == slog.KindAny {

+ if _, ok := a.Value.Any().(error); ok {

+ return tint.Attr(9, a)

+ }

+ }

+ return a

+ },

+ }),

+ )

+

+# Automatically Enable Colors

+

+Colors are enabled by default. Use the Options.NoColor field to disable

+color output. To automatically enable colors based on terminal capabilities, use

+e.g., the [go-isatty] package:

+

+ w := os.Stderr

+ logger := slog.New(

+ tint.NewHandler(w, &tint.Options{

+ NoColor: !isatty.IsTerminal(w.Fd()),

+ }),

+ )

+

+# Windows Support

+

+Color support on Windows can be added by using e.g., the [go-colorable] package:

+

+ w := os.Stderr

+ logger := slog.New(

+ tint.NewHandler(colorable.NewColorable(w), nil),

+ )

+

+[zerolog.ConsoleWriter]: https://pkg.go.dev/github.com/rs/zerolog#ConsoleWriter

+[go-isatty]: https://pkg.go.dev/github.com/mattn/go-isatty

+[go-colorable]: https://pkg.go.dev/github.com/mattn/go-colorable

+*/

+package tint

+

+import (

+ "context"

+ "encoding"

+ "fmt"

+ "io"

+ "log/slog"

+ "path/filepath"

+ "reflect"

+ "runtime"

+ "strconv"

+ "strings"

+ "sync"

+ "time"

+ "unicode"

+ "unicode/utf8"

+)

+

+const (

+ // ANSI modes

+ ansiEsc = '\u001b'

+ ansiReset = "\u001b[0m"

+ ansiFaint = "\u001b[2m"

+ ansiResetFaint = "\u001b[22m"

+ ansiBrightRed = "\u001b[91m"

+ ansiBrightGreen = "\u001b[92m"

+ ansiBrightYellow = "\u001b[93m"

+

+ errKey = "err"

+

+ defaultLevel = slog.LevelInfo

+ defaultTimeFormat = time.StampMilli

+)

+

+// Options for a slog.Handler that writes tinted logs. A zero Options consists

+// entirely of default values.

+//

+// Options can be used as a drop-in replacement for [slog.HandlerOptions].

+type Options struct {

+ // Enable source code location (Default: false)

+ AddSource bool

+

+ // Minimum level to log (Default: slog.LevelInfo)

+ Level slog.Leveler

+

+ // ReplaceAttr is called to rewrite each non-group attribute before it is logged.

+ // See https://pkg.go.dev/log/slog#HandlerOptions for details.

+ ReplaceAttr func(groups []string, attr slog.Attr) slog.Attr

+

+ // Time format (Default: time.StampMilli)

+ TimeFormat string

+

+ // Disable color (Default: false)

+ NoColor bool

+}

+

+func (o *Options) setDefaults() {

+ if o.Level == nil {

+ o.Level = defaultLevel

+ }

+ if o.TimeFormat == "" {

+ o.TimeFormat = defaultTimeFormat

+ }

+}

+

+// NewHandler creates a [slog.Handler] that writes tinted logs to Writer w,

+// using the given options. If opts is nil, the default options are used.

+func NewHandler(w io.Writer, opts *Options) slog.Handler {

+ if opts == nil {

+ opts = &Options{}

+ }

+ opts.setDefaults()

+

+ return &handler{

+ mu: &sync.Mutex{},

+ w: w,

+ opts: *opts,

+ }

+}

+

+// handler implements a [slog.Handler].

+type handler struct {

+ attrsPrefix string

+ groupPrefix string

+ groups []string

+

+ mu *sync.Mutex

+ w io.Writer

+

+ opts Options

+}

+

+func (h *handler) clone() *handler {

+ return &handler{

+ attrsPrefix: h.attrsPrefix,

+ groupPrefix: h.groupPrefix,

+ groups: h.groups,

+ mu: h.mu, // mutex shared among all clones of this handler

+ w: h.w,

+ opts: h.opts,

+ }

+}

+

+func (h *handler) Enabled(_ context.Context, level slog.Level) bool {

+ return level >= h.opts.Level.Level()

+}

+

+func (h *handler) Handle(_ context.Context, r slog.Record) error {

+ // get a buffer from the sync pool

+ buf := newBuffer()

+ defer buf.Free()

+

+ rep := h.opts.ReplaceAttr

+

+ // write time

+ if !r.Time.IsZero() {

+ val := r.Time.Round(0) // strip monotonic to match Attr behavior

+ if rep == nil {

+ h.appendTintTime(buf, r.Time, -1)

+ buf.WriteByte(' ')

+ } else if a := rep(nil /* groups */, slog.Time(slog.TimeKey, val)); a.Key != "" {

+ val, color := h.resolve(a.Value)

+ if val.Kind() == slog.KindTime {

+ h.appendTintTime(buf, val.Time(), color)

+ } else {

+ h.appendTintValue(buf, val, false, color, true)

+ }

+ buf.WriteByte(' ')

+ }

+ }

+

+ // write level

+ if rep == nil {

+ h.appendTintLevel(buf, r.Level, -1)

+ buf.WriteByte(' ')

+ } else if a := rep(nil /* groups */, slog.Any(slog.LevelKey, r.Level)); a.Key != "" {

+ val, color := h.resolve(a.Value)

+ if val.Kind() == slog.KindAny {

+ if lvlVal, ok := val.Any().(slog.Level); ok {

+ h.appendTintLevel(buf, lvlVal, color)

+ } else {

+ h.appendTintValue(buf, val, false, color, false)

+ }

+ } else {

+ h.appendTintValue(buf, val, false, color, false)

+ }

+ buf.WriteByte(' ')

+ }

+

+ // write source

+ if h.opts.AddSource {

+ fs := runtime.CallersFrames([]uintptr{r.PC})

+ f, _ := fs.Next()

+ if f.File != "" {

+ src := &slog.Source{

+ Function: f.Function,

+ File: f.File,

+ Line: f.Line,

+ }

+

+ if rep == nil {

+ if h.opts.NoColor {

+ appendSource(buf, src)

+ } else {

+ buf.WriteString(ansiFaint)

+ appendSource(buf, src)

+ buf.WriteString(ansiReset)

+ }

+ buf.WriteByte(' ')

+ } else if a := rep(nil /* groups */, slog.Any(slog.SourceKey, src)); a.Key != "" {

+ val, color := h.resolve(a.Value)

+ h.appendTintValue(buf, val, false, color, true)

+ buf.WriteByte(' ')

+ }

+ }

+ }

+

+ // write message

+ if rep == nil {

+ buf.WriteString(r.Message)

+ buf.WriteByte(' ')

+ } else if a := rep(nil /* groups */, slog.String(slog.MessageKey, r.Message)); a.Key != "" {

+ val, color := h.resolve(a.Value)

+ h.appendTintValue(buf, val, false, color, false)

+ buf.WriteByte(' ')

+ }

+

+ // write handler attributes

+ if len(h.attrsPrefix) > 0 {

+ buf.WriteString(h.attrsPrefix)

+ }

+

+ // write attributes

+ r.Attrs(func(attr slog.Attr) bool {

+ h.appendAttr(buf, attr, h.groupPrefix, h.groups)

+ return true

+ })

+

+ if len(*buf) == 0 {

+ buf.WriteByte('\n')

+ } else {

+ (*buf)[len(*buf)-1] = '\n' // replace last space with newline

+ }

+

+ h.mu.Lock()

+ defer h.mu.Unlock()

+

+ _, err := h.w.Write(*buf)

+ return err

+}

+

+func (h *handler) WithAttrs(attrs []slog.Attr) slog.Handler {

+ if len(attrs) == 0 {

+ return h

+ }

+ h2 := h.clone()

+

+ buf := newBuffer()

+ defer buf.Free()

+

+ // write attributes to buffer

+ for _, attr := range attrs {

+ h.appendAttr(buf, attr, h.groupPrefix, h.groups)

+ }

+ h2.attrsPrefix = h.attrsPrefix + string(*buf)

+ return h2

+}

+

+func (h *handler) WithGroup(name string) slog.Handler {

+ if name == "" {

+ return h

+ }

+ h2 := h.clone()

+ h2.groupPrefix += name + "."

+ h2.groups = append(h2.groups, name)

+ return h2

+}

+

+func (h *handler) appendTintTime(buf *buffer, t time.Time, color int16) {

+ if h.opts.NoColor {

+ *buf = t.AppendFormat(*buf, h.opts.TimeFormat)

+ } else {

+ if color >= 0 {

+ appendAnsi(buf, uint8(color), true)

+ } else {

+ buf.WriteString(ansiFaint)

+ }

+ *buf = t.AppendFormat(*buf, h.opts.TimeFormat)

+ buf.WriteString(ansiReset)

+ }

+}

+

+func (h *handler) appendTintLevel(buf *buffer, level slog.Level, color int16) {

+ str := func(base string, val slog.Level) []byte {

+ if val == 0 {

+ return []byte(base)

+ } else if val > 0 {

+ return strconv.AppendInt(append([]byte(base), '+'), int64(val), 10)

+ }

+ return strconv.AppendInt([]byte(base), int64(val), 10)

+ }

+

+ if !h.opts.NoColor {

+ if color >= 0 {

+ appendAnsi(buf, uint8(color), false)

+ } else {

+ switch {

+ case level < slog.LevelInfo:

+ case level < slog.LevelWarn:

+ buf.WriteString(ansiBrightGreen)

+ case level < slog.LevelError:

+ buf.WriteString(ansiBrightYellow)

+ default:

+ buf.WriteString(ansiBrightRed)

+ }

+ }

+ }

+

+ switch {

+ case level < slog.LevelInfo:

+ buf.Write(str("DBG", level-slog.LevelDebug))

+ case level < slog.LevelWarn:

+ buf.Write(str("INF", level-slog.LevelInfo))

+ case level < slog.LevelError:

+ buf.Write(str("WRN", level-slog.LevelWarn))

+ default:

+ buf.Write(str("ERR", level-slog.LevelError))

+ }

+

+ if !h.opts.NoColor && level >= slog.LevelInfo {

+ buf.WriteString(ansiReset)

+ }

+}

+

+func appendSource(buf *buffer, src *slog.Source) {

+ dir, file := filepath.Split(src.File)

+

+ buf.WriteString(filepath.Join(filepath.Base(dir), file))

+ buf.WriteByte(':')

+ *buf = strconv.AppendInt(*buf, int64(src.Line), 10)

+}

+

+func (h *handler) resolve(val slog.Value) (resolvedVal slog.Value, color int16) {

+ if !h.opts.NoColor && val.Kind() == slog.KindLogValuer {

+ if tintVal, ok := val.Any().(tintValue); ok {

+ return tintVal.Value.Resolve(), int16(tintVal.Color)

+ }

+ }

+ return val.Resolve(), -1

+}

+

+func (h *handler) appendAttr(buf *buffer, attr slog.Attr, groupsPrefix string, groups []string) {

+ var color int16 // -1 if no color

+ attr.Value, color = h.resolve(attr.Value)

+ if rep := h.opts.ReplaceAttr; rep != nil && attr.Value.Kind() != slog.KindGroup {

+ attr = rep(groups, attr)

+ var colorRep int16

+ attr.Value, colorRep = h.resolve(attr.Value)

+ if colorRep >= 0 {

+ color = colorRep

+ }

+ }

+

+ if attr.Equal(slog.Attr{}) {

+ return

+ }

+

+ if attr.Value.Kind() == slog.KindGroup {

+ if attr.Key != "" {

+ groupsPrefix += attr.Key + "."

+ groups = append(groups, attr.Key)

+ }

+ for _, groupAttr := range attr.Value.Group() {

+ h.appendAttr(buf, groupAttr, groupsPrefix, groups)

+ }

+ return

+ }

+

+ if h.opts.NoColor {

+ h.appendKey(buf, attr.Key, groupsPrefix)

+ h.appendValue(buf, attr.Value, true)

+ } else {

+ if color >= 0 {

+ appendAnsi(buf, uint8(color), true)

+ h.appendKey(buf, attr.Key, groupsPrefix)

+ buf.WriteString(ansiResetFaint)

+ h.appendValue(buf, attr.Value, true)

+ buf.WriteString(ansiReset)

+ } else {

+ buf.WriteString(ansiFaint)

+ h.appendKey(buf, attr.Key, groupsPrefix)

+ buf.WriteString(ansiReset)

+ h.appendValue(buf, attr.Value, true)

+ }

+ }

+ buf.WriteByte(' ')

+}

+

+func (h *handler) appendKey(buf *buffer, key, groups string) {

+ appendString(buf, groups+key, true, !h.opts.NoColor)

+ buf.WriteByte('=')

+}

+

+func (h *handler) appendValue(buf *buffer, v slog.Value, quote bool) {

+ switch v.Kind() {

+ case slog.KindString:

+ appendString(buf, v.String(), quote, !h.opts.NoColor)

+ case slog.KindInt64:

+ *buf = strconv.AppendInt(*buf, v.Int64(), 10)

+ case slog.KindUint64:

+ *buf = strconv.AppendUint(*buf, v.Uint64(), 10)

+ case slog.KindFloat64:

+ *buf = strconv.AppendFloat(*buf, v.Float64(), 'g', -1, 64)

+ case slog.KindBool:

+ *buf = strconv.AppendBool(*buf, v.Bool())

+ case slog.KindDuration:

+ appendString(buf, v.Duration().String(), quote, !h.opts.NoColor)

+ case slog.KindTime:

+ *buf = appendRFC3339Millis(*buf, v.Time())

+ case slog.KindAny:

+ defer func() {

+ // Copied from log/slog/handler.go.

+ if r := recover(); r != nil {

+ // If it panics with a nil pointer, the most likely cases are

+ // an encoding.TextMarshaler or error fails to guard against nil,

+ // in which case "" seems to be the feasible choice.

+ //

+ // Adapted from the code in fmt/print.go.

+ if v := reflect.ValueOf(v.Any()); v.Kind() == reflect.Pointer && v.IsNil() {

+ buf.WriteString("")

+ return

+ }

+

+ // Otherwise just print the original panic message.

+ appendString(buf, fmt.Sprintf("!PANIC: %v", r), true, !h.opts.NoColor)

+ }

+ }()

+

+ switch cv := v.Any().(type) {

+ case encoding.TextMarshaler:

+ data, err := cv.MarshalText()

+ if err != nil {

+ break

+ }

+ appendString(buf, string(data), quote, !h.opts.NoColor)

+ case *slog.Source:

+ appendSource(buf, cv)

+ default:

+ appendString(buf, fmt.Sprintf("%+v", cv), quote, !h.opts.NoColor)

+ }

+ }

+}

+

+func (h *handler) appendTintValue(buf *buffer, val slog.Value, quote bool, color int16, faint bool) {

+ if h.opts.NoColor {

+ h.appendValue(buf, val, quote)

+ } else {

+ if color >= 0 {

+ appendAnsi(buf, uint8(color), faint)

+ } else if faint {

+ buf.WriteString(ansiFaint)

+ }

+ h.appendValue(buf, val, quote)

+ if color >= 0 || faint {

+ buf.WriteString(ansiReset)

+ }

+ }

+}

+

+// Copied from log/slog/handler.go.

+func appendRFC3339Millis(b []byte, t time.Time) []byte {

+ // Format according to time.RFC3339Nano since it is highly optimized,

+ // but truncate it to use millisecond resolution.

+ // Unfortunately, that format trims trailing 0s, so add 1/10 millisecond

+ // to guarantee that there are exactly 4 digits after the period.

+ const prefixLen = len("2006-01-02T15:04:05.000")

+ n := len(b)

+ t = t.Truncate(time.Millisecond).Add(time.Millisecond / 10)

+ b = t.AppendFormat(b, time.RFC3339Nano)

+ b = append(b[:n+prefixLen], b[n+prefixLen+1:]...) // drop the 4th digit

+ return b

+}

+

+func appendAnsi(buf *buffer, color uint8, faint bool) {

+ buf.WriteString("\u001b[")

+ if faint {

+ buf.WriteString("2;")

+ }

+ if color < 8 {

+ *buf = strconv.AppendUint(*buf, uint64(color)+30, 10)

+ } else if color < 16 {

+ *buf = strconv.AppendUint(*buf, uint64(color)+82, 10)

+ } else {

+ buf.WriteString("38;5;")

+ *buf = strconv.AppendUint(*buf, uint64(color), 10)

+ }

+ buf.WriteByte('m')

+}

+

+func appendString(buf *buffer, s string, quote, color bool) {

+ if quote && !color {

+ // trim ANSI escape sequences

+ var inEscape bool

+ s = cut(s, func(r rune) bool {

+ if r == ansiEsc {

+ inEscape = true

+ } else if inEscape && unicode.IsLetter(r) {

+ inEscape = false

+ return true

+ }

+

+ return inEscape

+ })

+ }

+

+ quote = quote && needsQuoting(s)

+ switch {

+ case color && quote:

+ s = strconv.Quote(s)

+ s = strings.ReplaceAll(s, `\x1b`, string(ansiEsc))

+ buf.WriteString(s)

+ case !color && quote:

+ *buf = strconv.AppendQuote(*buf, s)

+ default:

+ buf.WriteString(s)

+ }

+}

+

+func cut(s string, f func(r rune) bool) string {

+ var res []rune

+ for i := 0; i < len(s); {

+ r, size := utf8.DecodeRuneInString(s[i:])

+ if r == utf8.RuneError {

+ break

+ }

+ if !f(r) {

+ res = append(res, r)

+ }

+ i += size

+ }

+ return string(res)

+}

+

+// Copied from log/slog/text_handler.go.

+func needsQuoting(s string) bool {

+ if len(s) == 0 {

+ return true

+ }

+ for i := 0; i < len(s); {

+ b := s[i]

+ if b < utf8.RuneSelf {

+ // Quote anything except a backslash that would need quoting in a

+ // JSON string, as well as space and '='

+ if b != '\\' && (b == ' ' || b == '=' || !safeSet[b]) {

+ return true

+ }

+ i++

+ continue

+ }

+ r, size := utf8.DecodeRuneInString(s[i:])

+ if r == utf8.RuneError || unicode.IsSpace(r) || !unicode.IsPrint(r) {

+ return true

+ }

+ i += size

+ }

+ return false

+}

+

+// Copied from log/slog/json_handler.go.

+//

+// safeSet is extended by the ANSI escape code "\u001b".

+var safeSet = [utf8.RuneSelf]bool{

+ ' ': true,

+ '!': true,

+ '"': false,

+ '#': true,

+ '$': true,

+ '%': true,

+ '&': true,

+ '\'': true,

+ '(': true,

+ ')': true,

+ '*': true,

+ '+': true,

+ ',': true,

+ '-': true,

+ '.': true,

+ '/': true,

+ '0': true,

+ '1': true,

+ '2': true,

+ '3': true,

+ '4': true,

+ '5': true,

+ '6': true,

+ '7': true,

+ '8': true,

+ '9': true,

+ ':': true,

+ ';': true,

+ '<': true,

+ '=': true,

+ '>': true,

+ '?': true,

+ '@': true,

+ 'A': true,

+ 'B': true,

+ 'C': true,

+ 'D': true,

+ 'E': true,

+ 'F': true,

+ 'G': true,

+ 'H': true,

+ 'I': true,

+ 'J': true,

+ 'K': true,

+ 'L': true,

+ 'M': true,

+ 'N': true,

+ 'O': true,

+ 'P': true,

+ 'Q': true,

+ 'R': true,

+ 'S': true,

+ 'T': true,

+ 'U': true,

+ 'V': true,

+ 'W': true,

+ 'X': true,

+ 'Y': true,

+ 'Z': true,

+ '[': true,

+ '\\': false,

+ ']': true,

+ '^': true,

+ '_': true,

+ '`': true,

+ 'a': true,

+ 'b': true,

+ 'c': true,

+ 'd': true,

+ 'e': true,

+ 'f': true,

+ 'g': true,

+ 'h': true,

+ 'i': true,

+ 'j': true,

+ 'k': true,

+ 'l': true,

+ 'm': true,

+ 'n': true,

+ 'o': true,

+ 'p': true,

+ 'q': true,

+ 'r': true,

+ 's': true,

+ 't': true,

+ 'u': true,

+ 'v': true,

+ 'w': true,

+ 'x': true,

+ 'y': true,

+ 'z': true,

+ '{': true,

+ '|': true,

+ '}': true,

+ '~': true,

+ '\u007f': true,

+ '\u001b': true,

+}

+

+type tintValue struct {

+ slog.Value

+ Color uint8

+}

+

+// LogValue implements the [slog.LogValuer] interface.

+func (v tintValue) LogValue() slog.Value {

+ return v.Value

+}

+

+// Err returns a tinted (colorized) [slog.Attr] that will be written in red color

+// by the [tint.Handler]. When used with any other [slog.Handler], it behaves as

+//

+// slog.Any("err", err)

+func Err(err error) slog.Attr {

+ return Attr(9, slog.Any(errKey, err))

+}

+

+// Attr returns a tinted (colorized) [slog.Attr] that will be written in the

+// specified color by the [tint.Handler]. When used with any other [slog.Handler], it behaves as a

+// plain [slog.Attr].

+//

+// Use the uint8 color value to specify the color of the attribute:

+//

+// - 0-7: standard ANSI colors

+// - 8-15: high intensity ANSI colors

+// - 16-231: 216 colors (6×6×6 cube)

+// - 232-255: grayscale from dark to light in 24 steps

+//

+// See https://en.wikipedia.org/wiki/ANSI_escape_code#8-bit

+func Attr(color uint8, attr slog.Attr) slog.Attr {

+ attr.Value = slog.AnyValue(tintValue{attr.Value, color})

+ return attr

+}
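The color ranges documented on `Attr` map onto escape sequences in `appendAnsi` above. As a sanity check of that mapping, here is a small sketch that builds the same sequences as strings; `ansiSeq` is a hypothetical stand-alone function written for this example and is not part of tint's API.

```go
package main

import (
	"fmt"
	"strconv"
)

// ansiSeq (hypothetical) builds the SGR escape sequence for a tint-style
// uint8 color, following the same three ranges as appendAnsi in the diff.
func ansiSeq(color uint8, faint bool) string {
	s := "\u001b["
	if faint {
		s += "2;"
	}
	switch {
	case color < 8:
		s += strconv.Itoa(int(color) + 30) // 30-37: standard colors
	case color < 16:
		s += strconv.Itoa(int(color) + 82) // 90-97: high-intensity colors
	default:
		s += "38;5;" + strconv.Itoa(int(color)) // 8-bit palette index
	}
	return s + "m"
}

func main() {
	fmt.Printf("%q\n", ansiSeq(9, false))   // the color used by tint.Err
	fmt.Printf("%q\n", ansiSeq(13, true))   // the TRC example color, rendered faint
	fmt.Printf("%q\n", ansiSeq(200, false)) // palette color 200
}
```

Color 9 therefore produces the same sequence as the `ansiBrightRed` constant, which matches the doc comment on `Err`.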

diff --git a/vendor/github.com/matoous/go-nanoid/v2/.gitignore b/vendor/github.com/matoous/go-nanoid/v2/.gitignore

new file mode 100644

index 0000000..485dee6

--- /dev/null

+++ b/vendor/github.com/matoous/go-nanoid/v2/.gitignore

@@ -0,0 +1 @@

+.idea

diff --git a/vendor/github.com/matoous/go-nanoid/v2/LICENSE b/vendor/github.com/matoous/go-nanoid/v2/LICENSE

new file mode 100644

index 0000000..7c5a779

--- /dev/null

+++ b/vendor/github.com/matoous/go-nanoid/v2/LICENSE

@@ -0,0 +1,21 @@

+The MIT License (MIT)

+

+Copyright (c) 2018 Matous Dzivjak

+

+Permission is hereby granted, free of charge, to any person obtaining a copy

+of this software and associated documentation files (the "Software"), to deal

+in the Software without restriction, including without limitation the rights

+to use, copy, modify, merge, publish, distribute, sublicense, and/or sell

+copies of the Software, and to permit persons to whom the Software is

+furnished to do so, subject to the following conditions:

+

+The above copyright notice and this permission notice shall be included in

+all copies or substantial portions of the Software.

+

+THE SOFTWARE IS PROVIDED "AS IS", WITHOUT WARRANTY OF ANY KIND, EXPRESS OR

+IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES OF MERCHANTABILITY,

+FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT. IN NO EVENT SHALL THE

+AUTHORS OR COPYRIGHT HOLDERS BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER

+LIABILITY, WHETHER IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING FROM,

+OUT OF OR IN CONNECTION WITH THE SOFTWARE OR THE USE OR OTHER DEALINGS IN

+THE SOFTWARE.

diff --git a/vendor/github.com/matoous/go-nanoid/v2/Makefile b/vendor/github.com/matoous/go-nanoid/v2/Makefile

new file mode 100644

index 0000000..946c643

--- /dev/null

+++ b/vendor/github.com/matoous/go-nanoid/v2/Makefile

@@ -0,0 +1,21 @@

+# Make this makefile self-documented with target `help`

+.PHONY: help

+.DEFAULT_GOAL := help

+help: ## Show help

+ @grep -Eh '^[0-9a-zA-Z_-]+:.*?## .*$$' $(MAKEFILE_LIST) | sort | awk 'BEGIN {FS = ":.*?## "}; {printf "\033[36m%-30s\033[0m %s\n", $$1, $$2}'

+

+.PHONY: lint

+lint: download ## Lint the repository with golang-ci lint

+ golangci-lint run --max-same-issues 0 --max-issues-per-linter 0 $(if $(CI),--out-format code-climate > gl-code-quality-report.json 2>golangci-stderr-output)

+

+.PHONY: test

+test: download ## Run all tests

+ go test -v

+

+.PHONY: bench

+bench: download ## Run all benchmarks

+ go test -bench=.

+

+.PHONY: download

+download: ## Download dependencies

+ go mod download

diff --git a/vendor/github.com/matoous/go-nanoid/v2/README.md b/vendor/github.com/matoous/go-nanoid/v2/README.md

new file mode 100644

index 0000000..6c54134

--- /dev/null

+++ b/vendor/github.com/matoous/go-nanoid/v2/README.md

@@ -0,0 +1,55 @@

+# Go Nanoid

+


+This package is a Go implementation of [ai's](https://github.com/ai) [nanoid](https://github.com/ai/nanoid)!

+

+**Safe.** It uses a cryptographically strong random generator.

+

+**Compact.** It uses more symbols than UUID (`A-Za-z0-9_-`)

+and has the same number of unique options in just 22 symbols instead of 36.

+

+**Fast.** Nanoid is as fast as UUID but can be used in URLs.

+

+There's also this alternative: https://github.com/jaevor/go-nanoid.

+

+## Install

+

+Via the `go get` tool:

+

+``` bash

+$ go get github.com/matoous/go-nanoid/v2

+```

+

+## Usage

+

+Generate ID

+

+``` go

+id, err := gonanoid.New()

+```

+

+Generate ID with a custom alphabet and length

+

+``` go

+id, err := gonanoid.Generate("abcde", 54)

+```

+

+## Notice

+

+If you use Go Nanoid in your project, please let me know!

+

+If you run into any issues, feel free to open one in this repository. Thanks!

+

+## Credits

+

+- [ai](https://github.com/ai) - [nanoid](https://github.com/ai/nanoid)

+- icza - his tutorial on [random strings in Go](https://stackoverflow.com/questions/22892120/how-to-generate-a-random-string-of-a-fixed-length-in-golang)

+

+## License

+

+The MIT License (MIT). Please see [License File](LICENSE.md) for more information.

diff --git a/vendor/github.com/matoous/go-nanoid/v2/gonanoid.go b/vendor/github.com/matoous/go-nanoid/v2/gonanoid.go

new file mode 100644

index 0000000..75aa3a5

--- /dev/null

+++ b/vendor/github.com/matoous/go-nanoid/v2/gonanoid.go

@@ -0,0 +1,108 @@

+package gonanoid

+

+import (

+ "crypto/rand"

+ "errors"

+ "math"

+)

+

+// defaultAlphabet is the alphabet used for ID characters by default.

+var defaultAlphabet = []rune("_-0123456789abcdefghijklmnopqrstuvwxyzABCDEFGHIJKLMNOPQRSTUVWXYZ")

+

+const (

+ defaultSize = 21

+)

+

+// getMask returns the bit mask applied to each random byte to obtain the index of a random character in the

+// alphabet. Example: if the alphabet has 6 = (110)_2 characters, the mask 7 = (111)_2 is sufficient.

+func getMask(alphabetSize int) int {

+ for i := 1; i <= 8; i++ {

+ mask := (2 << uint(i)) - 1

+ if mask >= alphabetSize-1 {

+ return mask

+ }

+ }

+ return 0

+}

+

+// Generate is a low-level function to change alphabet and ID size.

+func Generate(alphabet string, size int) (string, error) {

+ chars := []rune(alphabet)

+

+ if len(alphabet) == 0 || len(alphabet) > 255 {

+ return "", errors.New("alphabet must not be empty and contain no more than 255 chars")

+ }

+ if size <= 0 {

+ return "", errors.New("size must be positive integer")

+ }

+

+ mask := getMask(len(chars))

+ // Estimate how many random bytes the ID will need. More may be required in practice; this is a tradeoff

+ // between the average case and the worst case.

+ ceilArg := 1.6 * float64(mask*size) / float64(len(alphabet))

+ step := int(math.Ceil(ceilArg))

+

+ id := make([]rune, size)

+ bytes := make([]byte, step)

+ for j := 0; ; {

+ _, err := rand.Read(bytes)

+ if err != nil {

+ return "", err

+ }

+ for i := 0; i < step; i++ {

+ currByte := bytes[i] & byte(mask)

+ if currByte < byte(len(chars)) {

+ id[j] = chars[currByte]

+ j++

+ if j == size {

+ return string(id[:size]), nil

+ }

+ }

+ }

+ }

+}

+

+// MustGenerate is the same as Generate but panics on error.

+func MustGenerate(alphabet string, size int) string {

+ id, err := Generate(alphabet, size)

+ if err != nil {

+ panic(err)

+ }

+ return id

+}

+

+// New generates a secure, URL-friendly unique ID.

+// It accepts one optional parameter: the length of the ID to generate (21 by default).

+func New(l ...int) (string, error) {

+ var size int

+ switch {

+ case len(l) == 0:

+ size = defaultSize

+ case len(l) == 1:

+ size = l[0]

+ if size < 0 {

+ return "", errors.New("negative id length")

+ }

+ default:

+ return "", errors.New("unexpected parameter")

+ }

+ bytes := make([]byte, size)

+ _, err := rand.Read(bytes)

+ if err != nil {

+ return "", err

+ }

+ id := make([]rune, size)

+ for i := 0; i < size; i++ {

+ id[i] = defaultAlphabet[bytes[i]&63]

+ }

+ return string(id[:size]), nil

+}

+

+// Must is the same as New but panics on error.

+func Must(l ...int) string {

+ id, err := New(l...)

+ if err != nil {

+ panic(err)

+ }

+ return id

+}

diff --git a/vendor/modules.txt b/vendor/modules.txt

new file mode 100644

index 0000000..f6cb296

--- /dev/null

+++ b/vendor/modules.txt

@@ -0,0 +1,19 @@

+# git.antanst.com/antanst/uid v0.0.1 => ../uid

+## explicit; go 1.24.3

+git.antanst.com/antanst/uid